Token and Embedding: AI and LLM concepts that have long been part of SEO

Token and embedding are concepts in the spotlight these days, whether in the study and application of Artificial Intelligence (AI) and Large Language Models (LLMs) or in the development of agents, applications, tools, and businesses. Yet they have been part of SEO for a long time! You might say: but Alex, how come? I only started hearing about this after the GPTs of the world took over everything!

So stick with me: I'll show you how this used to work, and how it works today, in the search landscape. Let's start at the beginning, with tokens.

What are tokens?

A token is an individual unit of a text. Imagine a sentence broken down into its smallest meaningful parts: those parts are the tokens. To illustrate, let's take a simple sentence in Portuguese:

“A Busca Semântica melhora a qualidade da busca.” (“Semantic Search improves the quality of search.”)

Applying basic tokenization (splitting on spaces and punctuation), the tokens would be:

[“A”, “Busca”, “Semântica”, “melhora”, “a”, “qualidade”, “da”, “busca”, “.”]

In this example, each word and the final period count as separate tokens, because the system is doing only basic tokenization. More sophisticated systems could, for example, treat “Busca Semântica” as a single token if it were a named entity or a frequently searched concept, or simply if it appeared often enough in the text corpus used for training.
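To make the basic tokenization concrete, here is a minimal Python sketch. The function name `basic_tokenize` and the regular expression are my own illustrative choices, not how any particular search engine does it; real systems typically use more elaborate (often subword) tokenizers.

```python
import re


def basic_tokenize(text: str) -> list[str]:
    """Split text into word tokens and single-character punctuation tokens."""
    # \w+ matches a run of letters/digits (Unicode-aware, so accents are kept);
    # [^\w\s] matches one character that is neither a word character nor whitespace.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = basic_tokenize("A Busca Semântica melhora a qualidade da busca.")
print(tokens)
# ['A', 'Busca', 'Semântica', 'melhora', 'a', 'qualidade', 'da', 'busca', '.']
```

Note that “A” and “a”, or “Busca” and “busca”, come out as different tokens here. Lowercasing before indexing is a common extra step, but it is a design decision rather than part of tokenization itself.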

In the past, in traditional search, also called keyword search (token-based search), the system worked by splitting text into these tokens. They were then used to create a kind of numerical representation called a sparse embedding. Think of this type of embedding as a long list showing how many times each word or subword appears in a text.

The key characteristic here is that sparse embeddings do not consider the meaning of words, only how often they appear. It's like a library index, where you look things up by exact keywords.
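Here is a small sketch of that “long list” idea, using plain word counts. The two toy documents and the helper names (`build_vocabulary`, `count_vector`) are made up for illustration.

```python
from collections import Counter


def build_vocabulary(docs: list[list[str]]) -> list[str]:
    """Sorted list of every distinct token across all documents."""
    return sorted({token for doc in docs for token in doc})


def count_vector(tokens: list[str], vocabulary: list[str]) -> list[int]:
    """One position per vocabulary term; the value is how often it occurs."""
    counts = Counter(tokens)
    return [counts[term] for term in vocabulary]


# Toy, already-tokenized documents (hypothetical data).
docs = [
    ["semantic", "search", "improves", "search", "quality"],
    ["the", "film", "opens", "at", "the", "cinema"],
]
vocabulary = build_vocabulary(docs)
vectors = [count_vector(doc, vocabulary) for doc in docs]

print(vocabulary)
print(vectors[0])  # [0, 0, 0, 1, 0, 1, 2, 1, 0]
print(vectors[1])  # [1, 1, 1, 0, 1, 0, 0, 0, 2]
```

Even with nine vocabulary terms, a good share of each vector is zero. With a real vocabulary of tens of thousands of terms, almost every position is zero, which is where the name “sparse” comes from.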

To illustrate again, take a sentence like the one in the example above. It is “tokenized” (split into tokens) so that the system can index it and compare it against the exact words of your query. There are classic algorithms for generating sparse embeddings, such as TF-IDF (Term Frequency-Inverse Document Frequency) and BM25, and more recent learned approaches such as SPLADE.

TF-IDF, for example, gives more weight to words that are frequent in a specific document but rare in the corpus as a whole, which highlights their importance to that document. In general, though, the classic methods rely only on word statistics such as frequency, while SPLADE uses a neural language model to decide which terms to weight.
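The weighting described above can be sketched in a few lines. This uses one textbook variant (term frequency normalized by document length, multiplied by `log(N / df)`); libraries and search engines use different smoothing and normalization, so treat the exact formula as an assumption. The toy documents are made up.

```python
import math
from collections import Counter


def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Return one {term: weight} mapping per (non-empty) tokenized document."""
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears at least once.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


docs = [
    ["semantic", "search", "improves", "search", "quality"],
    ["keyword", "search", "matches", "exact", "words"],
    ["the", "film", "opens", "at", "the", "cinema"],
]
weights = tf_idf(docs)
for term, weight in sorted(weights[0].items(), key=lambda kv: -kv[1]):
    print(f"{term}: {weight:.3f}")
```

In the first document, “search” appears twice but also shows up in another document, so it ends up with a lower weight than “semantic”, which appears once but only there. Still, nothing in this calculation knows what either word means.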

Historically, search was more deterministic: content was indexed much as it came in, without much interpretation by the algorithms. Documents were “decomposed” in a way we call “lexical”, basically by counting the distribution of words. That contrasts with today's information retrieval model, which is semantic. And to get to semantics, another concept is indispensable: embeddings!

What are (Dense) Embeddings?

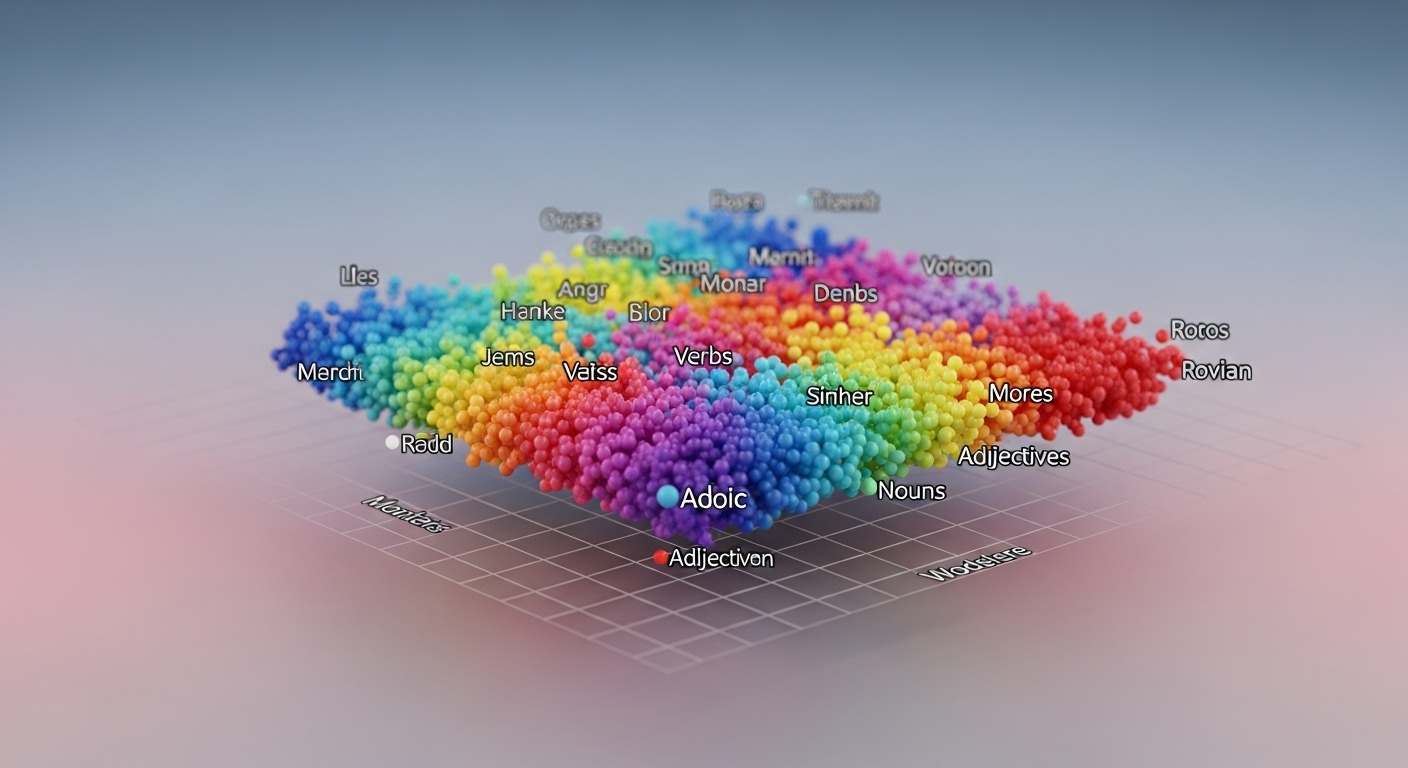

An embedding, on the other hand, is a numerical representation of words or texts, specifically a numeric vector, that captures semantic relationships and contextual information. Imagine each word or text as a point on a multidimensional “map”, where closeness between points indicates similarity of meaning. The distance and direction between these vectors encode how semantically similar the words are.
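One common way to measure that closeness is cosine similarity, which compares the direction of two vectors. The sketch below uses hand-picked three-dimensional vectors purely for illustration; real embeddings come from a trained model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented for this example (not model output).
embeddings = {
    "film": [0.9, 0.8, 0.1],
    "cinema": [0.8, 0.9, 0.2],
    "invoice": [0.1, 0.2, 0.9],
}

print(cosine_similarity(embeddings["film"], embeddings["cinema"]))   # close to 1
print(cosine_similarity(embeddings["film"], embeddings["invoice"]))  # much lower
```

Values near 1 mean the vectors point in almost the same direction, which the model treats as similar meaning. Values near 0 mean the items are unrelated.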

So why do we need this, you may ask.

Because most Machine Learning algorithms cannot process raw, untreated text; they need numbers as input. This is where embeddings come in.

These embeddings are created by embedding models (which deserve an article of their own), trained by scanning large volumes of text, such as the whole of Wikipedia. That scale is also where the term LLM, Large Language Model, comes from. You use that enormous volume of text so the models can learn the relationships between words and their contexts.

This process involves:

- Pre-processing: tokenization and removal of “stop words” (common words such as “the”, “a”, “and”) and punctuation.

- Sliding context window: identifies the target words and their contexts so the model can learn how they relate.

- Training: the model is trained to predict words from their context, placing semantically similar words close to each other in the vector space. The model's parameters are adjusted to minimize prediction errors.
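The sliding context window step above can be sketched as follows. This is in the spirit of how Word2Vec-style models build their training examples, but the function name `context_pairs` and the window size are my own illustrative assumptions, and the training itself (adjusting vectors to reduce prediction error) is left out.

```python
def context_pairs(tokens: list[str], window: int = 2) -> list[tuple[str, str]]:
    """Build (target, context) pairs from every token and its neighbours."""
    pairs = []
    for i, target in enumerate(tokens):
        # Clamp the window to the boundaries of the token list.
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs


tokens = ["semantic", "search", "improves", "quality"]
pairs = context_pairs(tokens, window=1)
for target, context in pairs:
    print(target, "->", context)
```

A model trained on millions of such pairs is pushed to give similar vectors to words that keep showing up in similar contexts.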

These are also known as dense embeddings. They get that name because the vectors that represent them contain mostly non-zero values, unlike sparse ones. It took me a long time to understand this, but here is the short version as I understand it: instead of reserving one position for every word in the vocabulary, most of which stay at zero, the model learns to pack the information into a much smaller, fixed number of dimensions, placing similar items close together. That helps in several ways, including system performance. In other words, the zeros go away and what remains are values that carry meaning.

Please correct me in the comments if I got anything wrong here.

But what matters for our article is that they are extremely effective for building models that understand the meaning and context of words.

For example, in a system that uses one of these models, a search for “film” can also return relevant results containing “cinema” or “feature film”, because the embedding model understands that these words have similar meanings. This significantly improves search quality.

Embeddings in information retrieval: an old topic

Google has been incorporating this technology into its search for years!

RankBrain, launched back in 2015, was the first deep learning system deployed in Search, and even then it helped understand how words relate to concepts.

In 2018, Neural Matching made it possible to understand how queries relate to pages by looking at the entire query or page, not just keywords.

BERT, in 2019, was a major leap in natural language understanding, helping to capture how combinations of words express different meanings and intents.

And MUM, from 2021, was announced by Google as 1,000 times more powerful than BERT, able to understand and generate language, multimodal (text, images, etc.), and trained across 75 languages. This is where multimodal search took off: several types of content, not just text, were being transformed into embeddings. Transformed... does that ring a bell?

To optimize this process, documents are also broken down into vector embeddings for indexing. So shall we organize all of this in a table to understand it better? That's what I did to make sense of it myself.

Key differences between tokens and embeddings:

| Characteristic | Token (Sparse Embedding) | Embedding (Dense) |
|---|---|---|
| Representation | Raw text units (words, subwords) | Numeric vectors |
| Focus | Word frequency and text syntax | Semantic meaning and context |
| Similarity | Based on exact keywords and their distribution | Based on closeness of meaning in vector space |
| Main use | Traditional keyword search (lexical search) | Semantic search and AI applications that require understanding of meaning |
| Dimensionality | Can have tens of thousands of dimensions, mostly zeros (sparse) | Usually hundreds or thousands of dimensions, with predominantly non-zero values (dense) |
| Examples | TF-IDF, BM25, SPLADE | Models such as Word2Vec and GloVe, and more recent ones such as BERT, MUM, and Gemini |

Hybrid search, AI, tokens, and embeddings

The key insight in this shift is that efficient AI-powered search retrieval doesn't rely on just one or the other, but on a strategic combination: Hybrid Search. If you want to dig deeper, I wrote an article on LinkedIn about it, based on research I did on the subject.

In short, hybrid search combines semantic (vector) search with keyword search to meet a very specific need: finding matches outside a domain of knowledge, so that the system you built and trained can handle entities it has never seen.

Why do you need hybrid search?

You will need it in very specific cases, for example if you are building an agent that will interact with your customers and they may ask questions outside the domain of knowledge your model was trained on. Think about your business: is that a possibility? Then it's worth getting familiar with this search model.

Semantic search, although very effective, has a drawback: it can struggle with “out-of-domain” information, that is, data the embedding model was not trained on. Remember when the Claude and ChatGPT prompts leaked and we saw that they run searches beyond their training data? That is meant to fill this gap. Out-of-domain data also includes, for example, specific product numbers, new product names, or internal company codes.

In these cases, semantic search “comes up empty” because it can only find what it already “knows”. When the user needs something outside what the model knows, the system falls back on token-based search to fill the gap.

By integrating semantic search (for subtler, contextual queries) with traditional keyword search (for specific, out-of-domain terms), hybrid search aims for the “best of both worlds”, delivering a more comprehensive and precise search experience. It answers a need specific to AI models, one that Google did not have before AI Overviews.

Could that be why Google took so long to get on board?
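To give an idea of how the two result lists can be merged in practice, here is a sketch using Reciprocal Rank Fusion (RRF), one common technique for hybrid search. I am not claiming this is what Google or any specific product does. The document IDs and both rankings are invented, and `k = 60` is just the value usually seen in examples.

```python
def reciprocal_rank_fusion(
    rankings: list[list[str]], k: int = 60
) -> list[tuple[str, float]]:
    """Merge ranked lists: each document scores 1 / (k + rank) per list."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest combined score first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results for the same query from two retrievers.
keyword_ranking = ["sku-4821", "doc-a", "doc-b"]   # exact match on a product code
semantic_ranking = ["doc-a", "doc-c", "sku-4821"]  # closest in meaning

fused = reciprocal_rank_fusion([keyword_ranking, semantic_ranking])
print([doc_id for doc_id, _ in fused])
# ['doc-a', 'sku-4821', 'doc-c', 'doc-b']
```

RRF only looks at positions, not raw scores, which is handy because keyword scores and vector similarities live on different scales. Documents that rank well in both lists rise to the top, while an exact product-code match found only by the keyword side still makes it into the results.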

Shall we sum up?

Tokens are the lexical foundation of language, while dense embeddings are the numerical representation of its meaning. Modern search, mediated by algorithms and AI, can use both, as in hybrid search, but there is a growing tendency to focus on embeddings. Because they help models understand context and intent, and increase the “reasoning” capacity of language models, the choice becomes more than obvious.

Part of our job as search specialists is to structure data and content so these systems can understand them, reason over them, and present them effectively, including in a hyper-personalized way. The era of agentic AI is beginning, and our next “client” is precisely one of those agents.
