Why will the Helpful Content Update prioritize specialists?

Google's newest update arrives, alongside MUM, BERT, and several others, to prioritize content. Since Hummingbird, Google (and its competitors and admirers) has been trying to make search as semantic as possible. And where Schema.org is not adopted, or is misused, it is the <main> element they turn to.

In this context, the Helpful Content Update (HCU) sets out to identify quality content produced by humans, for humans. It is a bold proposal. It puts Google face to face with content-producing AIs on one side and bad content producers on the other. To pull it off, it will need to lean on a group that becomes more relevant to Google every year: specialists.

Here we explain the thesis that specialists are Google's preferred group, and how that affects content production. The reasoning follows:

Goal of the Helpful Content Update

The update comes, like others before it, to defend Google's greatest asset: trustworthiness. A search engine's value comes from its ability to meet a demand, to keep a promise. And that promise is to deliver the answer to the user.

Unlike McDonald's, where we go expecting a specific burger flavor, we go to Google expecting the answer to a question. As with fast food, we want it fast, hot, and tasty.

As a profit-seeking company, Google needs to protect the relevance of its assets. The biggest of them is the indexing algorithm. By strengthening it, and this is where the HCU comes in, the company gains financially and gains on another front we cannot forget: the political one.

HCU and financial value

Looking at Google's numbers, the company keeps growing in value and investing in new lines of business. "Other Bets", Google's investment segment, has been running continuous losses for years. That happens because they are bets: the company believes that one of the dozens of startups it has bought, or business models it has created, will bring huge returns.

The HCU goes the other way: it is a bet on the company's main asset. Its results will affect the company's main revenue channel, which is why it matters so much.

HCU and political value

A constant risk for companies arises when they start getting involved with politics. In Brazil, business owners have had their tax secrecy lifted, a move that will affect their companies, whose image is already tarnished.

Scandals like those at Eike Batista's companies likewise show the risks of dealing with politics. Search engines are among the main drivers of decision-making today. The Band debate, the first presidential debate of the year, put YouTube metrics side by side with career journalists. Search engines are political.

The HCU also comes into play as a strategy for staying politically neutral. It is no accident that the tool claims to "average the opinions of specialists". An expertise it developed earlier, and that proved itself during the pandemic, will now also be applied to the more specific contexts of niches.

How the Helpful Content Update works

The impacts and fine details of the HCU are still to come. The update, which has not yet finished its full rollout, will affect sites. SEO gurus will go hunting for case studies to prove their worth and sell the "how to" of the process. And until then, maybe John Mueller will say something.

What we can examine is Google's track record and what has already been done, along with the goals listed above. The HCU arrives right after MUM. The Multitask Unified Model uses AI to build a consensus among specialists and then delivers the results.

Both are part of the same movement by Google: one that is as much algorithmic as political.

What we know Google analyzes is page metadata. Structuring it well, from a semantic point of view, will optimize the page's indexing. What we can now perceive is that the content, meaning the body of the page or article, will come into play, with authority terms and reference terms calibrated to tell the algorithm how much expertise the author has.

And it is precisely at the interface between metadata and content that specialists beat generalists.

Author metadata for the HCU

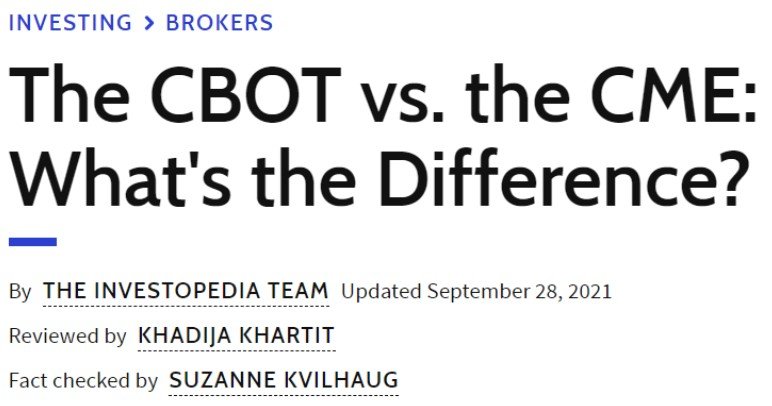

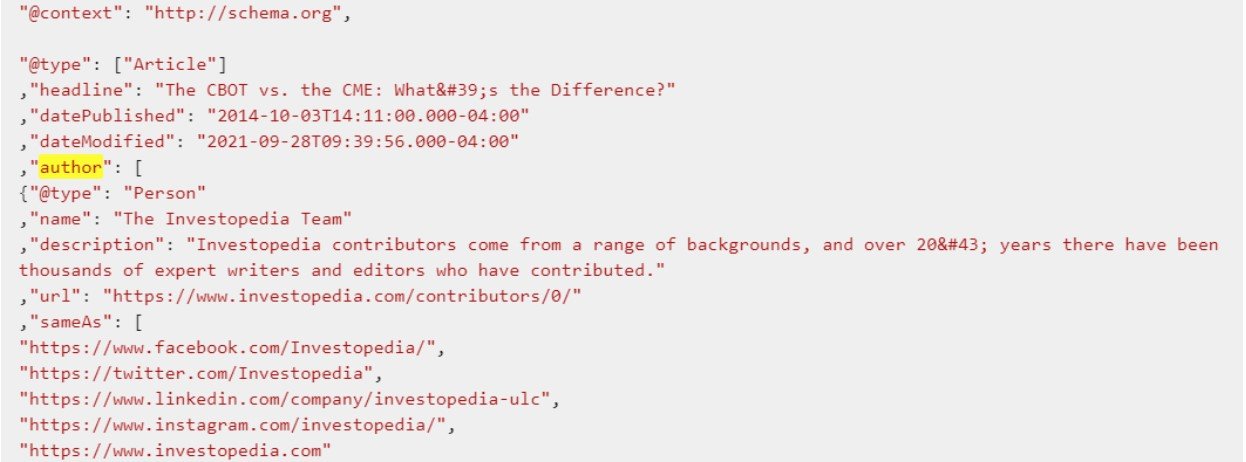

The greater a portal's authority, the more detailed its author metadata tends to be. In finance, for example, few are ahead of Investopedia in the English language. And its semantic structure of author, reviewer, and fact-checker in the metadata is impeccable.

The CBOT vs. the CME: What’s the Difference? (investopedia.com)

Here we see the level of detail Investopedia reaches when defining its authors. The degree of specificity is the same for the reviewer and the fact-checker. Authority arguments and other metadata (links to social networks and websites, for example) are used in abundance. The goal? To build credibility with the search engine.

At this point we enter conjecture, but considering that this data tends to repeat across pages, it would be a great waste not to use it to evaluate rankings and cross-reference statistics. If I know that the "Investopedia Team" publishes dozens of articles on a topic every month, and that those articles are read, visited, and meet readers' demand for information, then the Investopedia entity must have some value. Right?

From this point on, the author metadata gains semantics. The Investopedia Team writes this way (article 1). And also this other way (article 2). After 40 or 60 articles, it gains an identity. But if I put 60 laypeople to write those articles, what do I get? Inconsistency and incompetence.
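To make the conjecture above concrete, here is a minimal sketch of how repeated author metadata could be cross-referenced with topics. It only illustrates the reasoning in this article; it is not a description of how Google works. The `pages` list, the topic labels, and the `topic_share` helper are all hypothetical.

```python
from collections import Counter

# Hypothetical (author, topic) pairs, as they might be read from page metadata.
pages = [
    ("Investopedia Team", "futures"),
    ("Investopedia Team", "futures"),
    ("Investopedia Team", "options"),
    ("Guest Writer", "futures"),
]


def topic_share(pages, author):
    """Return the fraction of an author's pages that fall under each topic."""
    topics = Counter(topic for name, topic in pages if name == author)
    total = sum(topics.values())
    # An author with no pages yields an empty dict, so no division by zero occurs.
    return {topic: count / total for topic, count in topics.items()}


shares = topic_share(pages, "Investopedia Team")
print(shares)
```

An author entity whose pages concentrate on a few related topics looks consistent; sixty unrelated bylines would not.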

How to optimize author metadata

Author metadata for the HCU can be optimized. The important thing to remember is that this data provides semantic context and can reference other entities. The closer those entities are to your semantic universe, the more relevant their mentions in the author metadata.

This short checklist can help you put together the biography for your metatag:author.

- Education: degree abbreviation, course, and university (if any);

- Years of experience;

- Places where you have worked;

- Positions you have held;

- Published articles (scientific papers listed separately);

- Published books.
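One possible way to express this checklist is as a Schema.org `Person` object serialized to JSON-LD. The sketch below is an assumption about how you might structure it, not a format the HCU is known to require. The `build_author` helper, the person "Jane Doe", and the example.com URLs are hypothetical; the property names (`jobTitle`, `alumniOf`, `worksFor`, `sameAs`, `description`) come from the Schema.org vocabulary.

```python
import json


def build_author(name, job_title, years_experience, degree, university,
                 employers, profile_urls):
    """Return a Schema.org Person as a plain dict, ready to dump as JSON-LD."""
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        # Assumption: years of experience and the degree go in the free-text bio.
        "description": (
            f"{job_title} with {years_experience} years of experience. "
            f"{degree}, {university}."
        ),
        "alumniOf": {"@type": "EducationalOrganization", "name": university},
        "worksFor": [{"@type": "Organization", "name": e} for e in employers],
        # Links to profiles, published articles, or books (hypothetical URLs).
        "sameAs": list(profile_urls),
    }


author = build_author(
    name="Jane Doe",
    job_title="Financial Analyst",
    years_experience=12,
    degree="BSc in Economics",
    university="Example University",
    employers=["Example Bank"],
    profile_urls=["https://example.com/jane-doe"],
)
author_json = json.dumps(author, indent=2, ensure_ascii=False)
print(author_json)
```

The printed JSON would typically be placed inside a `<script type="application/ld+json">` tag on the page.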

Quality of authored content

Next comes the quality of the authored content itself. As long as I write my own texts, I guarantee uniformity. Certain expressions, figures of speech, and anecdotes tend to repeat. The subjects in which I am an expert also show up more often and come across more clearly. And I make the search engine's job easier.

Consequently, if I am an authority, the author metadata associated with me tends to receive that score. It is tied to a valuable product: relevant information. And the next quality text reinforces that impression. As does the next. And the next. And so on.

The author, both the one in the metadata and the flesh-and-blood one, will need to combine content experience (a term I first saw in a post by Gustavo Rodrigues) with content quality. In the finance market, this tension is very common. Terms like Capex, net income, amortization, and technical analysis are for the "initiated" and signal competence. On the other hand, they push away the bulk of readers.

How to optimize your content experience

Unlike what used to be done, the updates ask you to stop repeating the keyword at regular intervals. Do not even think in keywords. The goal is to give the information context in a pleasant way.

Here it helps to separate two distinct axes: easy versus hard, and simple versus complex. What is complex can be easy, and what is simple can be hard. The goal of content experience is to optimize complex content so that it becomes easier and easier, and to accept that simple content has no excuse for being hard.

We have thought of a few strategies that help develop the experience of consuming information:

- Be clear about search intent: "What time is it" does not call for an answer longer than five characters (counting the ":"). "How to do semantic SEO on my blog", on the other hand, may call for more than 3,000 words. That intent will guide your content.

- Seek dialogism: Pleasant content is dialogic. It is no accident that we want to talk to someone when we have a problem with the bank, or that we feel blocked in asynchronous, remote classes. Dialogism shows up in short sentences, a middle ground of formality, and the avoidance of complex words.

- Write and revise: The original content, the first draft, will rarely be the best. Even after years of practice, you will need to revisit it. If you can, leave a few hours between finishing and revising. A full day is ideal.

- Grammar matters: Error-ridden text is painful, like a bumpy road. The view may be wonderful, but the errors get in the way of understanding. Such a text clearly does not care about its reader.

- Stay within your scope: Contrary to what social networks preach, we do not look for information from someone who knows everything about everything. At least, Google does not want that. So aim at your expertise. Value what you have studied.

- Signal limits: Since we do not know everything, make that explicit. "We will not go into this because we are not (insert a profession here)." This gesture strengthens your credibility. It shows you know how far you can go.

How to make the two work together?

Author metadata and content quality need to work together to tell Google that you deserve to be read. There is a way to make that happen, and it becomes clear in the Investopedia example: review.

The "reviewedBy" metadata property lets you attach a second person to the page, which allows two possible combinations:

| Author | Reviewer | Profile |
| --- | --- | --- |
| SEO Writer | Specialist | Verifier |
| Specialist | SEO Writer | Qualifier |

In the "Verifier" profile, the Specialist works on top of an SEO-written draft. They need some grasp of the "how" and "why" of an argument, but what they bring is precision in the use of terms. Artistic freedom gets trimmed a little, but factual accuracy brings credibility.

In the "Qualifier" profile, a Specialist drafts something more technical and the SEO Writer optimizes it. This leads to anecdotes or storytelling appearing in the middle of the text, backed by the technical explanations. It also favors something Google already invests in: fact-checking.

Since Google launched its fact-check search and signed agreements with Poynter, fact-checking has received increasing attention from the search giant. This leads to a scenario where third-party validation is encouraged. And that is what a reviewer does: brings consensus into a page's metadata.
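As a rough illustration of pairing an author with a reviewer, the sketch below builds a Schema.org `WebPage` that carries both `author` and `reviewedBy`. It assumes JSON-LD as the delivery format; the `person` and `build_reviewed_page` helpers, the names, and the headline are hypothetical, and nothing here is confirmed as what the HCU reads.

```python
import json


def person(name, job_title):
    """Return a minimal Schema.org Person dict."""
    return {"@type": "Person", "name": name, "jobTitle": job_title}


def build_reviewed_page(headline, author, reviewer):
    """Return a Schema.org WebPage with an author and a reviewer attached."""
    return {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "headline": headline,
        "author": author,
        # reviewedBy is defined on WebPage in the Schema.org vocabulary.
        "reviewedBy": reviewer,
    }


# SEO writer as author, specialist as reviewer (hypothetical people).
page = build_reviewed_page(
    headline="What is amortization?",
    author=person("Alex Writer", "SEO Writer"),
    reviewer=person("Jane Doe", "Financial Analyst"),
)
page_json = json.dumps(page, indent=2, ensure_ascii=False)
print(page_json)
```

Swapping the `author` and `reviewer` arguments gives the other combination, with the specialist writing and the SEO writer reviewing.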

This article was written by Victor Gabry, UX Researcher and SEO Writer at Americanas.com. Victor is specializing in technology (SaaS) and finance in order to turn technical subjects into something easy to consume.